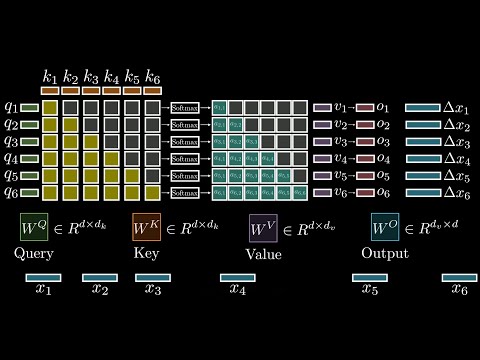

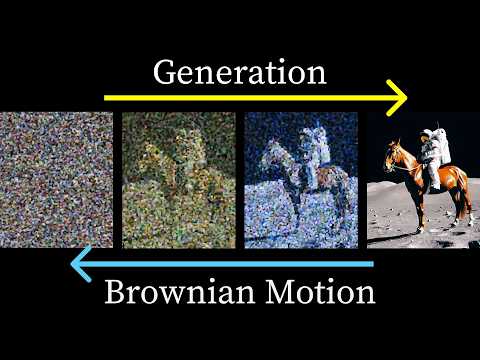

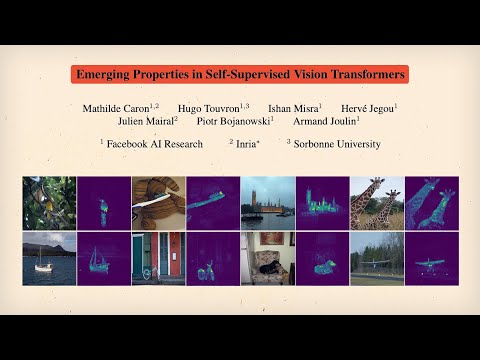

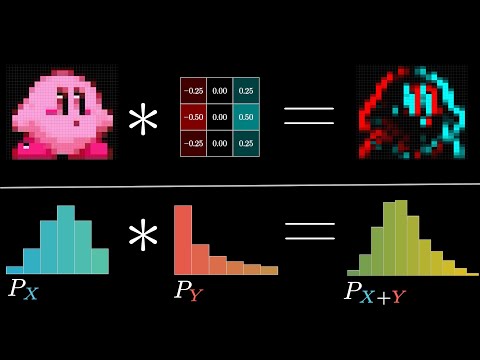

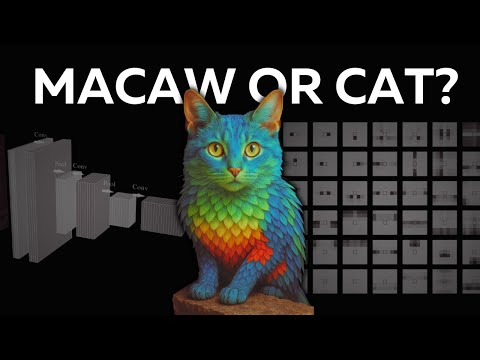

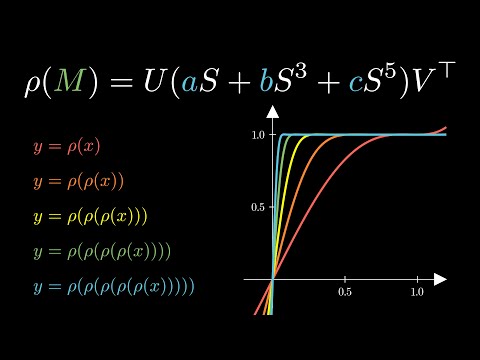

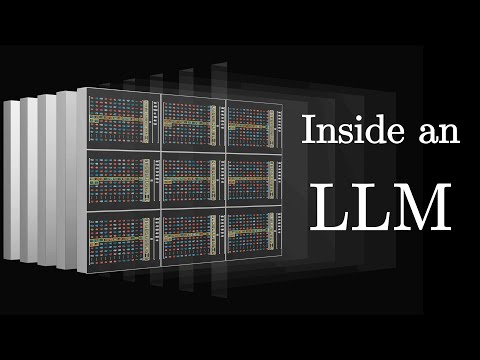

How can we train a general-purpose vision model to perceive our visual world? This video dives into the fascinating idea of self-supervised learning. We will discuss the basic concepts of transfer learning, contrastive language-image pretraining (CLIP), and self-supervised learning methods. These include the masked autoencoder (MAE), contrastive methods like SimCLR, and self-distillation methods like DINOv1, v2, and v3. I hope you enjoy the video!

Chapters:

- 00:00 Introduction
- 00:33 Why do features matter?
- 01:11 Learning features using classification
- 02:14 Learning features using language (CLIP)
- 04:09 Learning features using pretask (Self-supervised learning)
- 05:20 Learning features using contrast (SimCLR)
- 06:36 Learning features using self-distillation (DINOv1)
- 12:18 DINOv2
- 13:54 DINOv3

References:

- Language-image pretraining: [CLIP]
- Self-supervised learning (pretask): [Context encoder] [Colorization] [Rotation prediction] [Jigsaw puzzle] [Temporal order shuffling]
- Contrastive learning: [SimCLR]
- Inpainting: [MAE] [iBOT]
- Self-distillation: [DINOv1] [DINOv2] [DINOv3]
- Self-supervised learning: [Cookbook]

Video made with Manim.
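The description names two families of self-supervised objectives: contrastive learning (SimCLR) and self-distillation (DINO). The NumPy sketch below shows each one, built on several simplifying assumptions. There is no encoder network or projection head, and only one pair of views is used per image. The hyperparameter defaults are illustrative: a temperature of 0.5 for NT-Xent, student/teacher temperatures of 0.1/0.04, center momentum 0.9, and EMA momentum 0.996. None of these values come from the video. The function names (`nt_xent_loss`, `dino_loss`, `update_center`, `ema_update`) are hypothetical and do not belong to any library's API.

```python
import numpy as np


def log_softmax(x, axis=-1):
    # Numerically stable log-softmax: subtract the row max before exponentiating.
    m = np.max(x, axis=axis, keepdims=True)
    return x - m - np.log(np.sum(np.exp(x - m), axis=axis, keepdims=True))


def nt_xent_loss(z1, z2, temperature=0.5):
    """SimCLR-style contrastive (NT-Xent) loss, illustrative sketch.

    z1[i] and z2[i] are embeddings of two augmented views of image i; every
    other embedding in the 2N-sample batch acts as a negative.
    """
    n = z1.shape[0]
    z = np.concatenate([z1, z2], axis=0)              # (2N, D)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)  # unit vectors, so dot = cosine
    sim = z @ z.T / temperature                       # (2N, 2N) similarity logits
    np.fill_diagonal(sim, -np.inf)                    # a sample is never compared with itself
    pos = np.concatenate([np.arange(n, 2 * n), np.arange(n)])  # positive partner per row
    logp = log_softmax(sim, axis=1)
    return float(-logp[np.arange(2 * n), pos].mean())


def dino_loss(student_logits, teacher_logits, center, tau_s=0.1, tau_t=0.04):
    """Self-distillation loss in the spirit of DINOv1 (single view pair, simplified).

    The teacher output is centered and sharpened (low temperature), then used
    as a fixed soft target for the student; in training no gradient flows
    through the teacher.
    """
    p_teacher = np.exp(log_softmax((teacher_logits - center) / tau_t))
    logp_student = log_softmax(student_logits / tau_s)
    return float(-(p_teacher * logp_student).sum(axis=-1).mean())


def update_center(center, teacher_logits, m=0.9):
    # Running mean of teacher outputs; subtracting it discourages collapse.
    return m * center + (1 - m) * teacher_logits.mean(axis=0)


def ema_update(teacher_params, student_params, momentum=0.996):
    # Teacher weights track an exponential moving average of the student's.
    return {k: momentum * teacher_params[k] + (1 - momentum) * student_params[k]
            for k in teacher_params}


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    z1 = rng.normal(size=(8, 32))
    z2 = z1 + 0.05 * rng.normal(size=(8, 32))          # two "views" of the same 8 images
    print(nt_xent_loss(z1, z2))                        # views agree: lower loss
    print(nt_xent_loss(z1, rng.normal(size=(8, 32))))  # unrelated "views": higher loss
```

The two losses stop collapse in different ways. Contrastive learning pushes each view away from the other samples in the batch. Self-distillation uses no negatives at all. Instead it relies on centering and sharpening the teacher, and on updating the teacher slowly through EMA.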

- 50,620 views

- Published 3 months ago by Jia-Bin Huang

How AI Taught Itself to See [DINOv3]
